You are viewing an old revision of this post, from December 10, 2020 @ 20:02:39.

Recently, a leader in the AI field, Pedro Domingos (someone whose books I recommend), kicked off a fun discussion on Twitter by posting this:

https://twitter.com/pmddomingos/status/1336187141366317056?s=20

The claim is simple: conferences should not reject papers on the basis of ethics concerns. Rejection should be restricted to ordinary grounds like “technical merit” and “novelty.”

I made fun of Dr. Pedro Domingos with a rather unfair joke, the point being that ethics have long been considered part of science. Perhaps I overstate by saying “long”: as one commentator noted, many of the ethics standards were 1970s-era responses to the Tuskegee PHS syphilis study (and the Nazis, of course). Scientists in that study lied to participants about the health care they were receiving and left the men with syphilis untreated, even after penicillin became the standard cure, so many suffered unnecessarily in the interests of scientific practice.

My own view is that this history is sufficient reason to make ethical review part of the scientific review process (including conference review), especially for venues like NeurIPS that publish a growing number of applied papers.

But. But, but, but.

AI is Different

Artificial Intelligence, Machine Learning, Statistical Learning – whatever you want to call it, these systems enable the automation of decisions.

This is new.

Certainly, other things have contributed to the “alienation” of human decision making. Laws, policies, and organizations that shield people from responsibility all make it easy for a person to evade responsibility while making extraordinarily damaging decisions. The Nuremberg trials (not to beat a dead horse) showed this writ large, and later psychology experiments, like the series that Stanley Milgram pursued, further explored how ordinary people could do monstrous things.

However, in all cases, a human being made the decision. They performed the precipitating action. They chose.

The goal we have for AI – certainly the goal I have for AI, when I apply these techniques in my work and career – is to remove the human from the decision process: to learn how to decide, and then to scale the decision making. A human might be able to decide after 15 minutes of consideration; a machine can do so in microseconds.

At the core, this is not so different from traditional programming. You write down code (e.g. a for loop) and you can repeat that action with machine precision. Still… when you do that, you write down the logic. We even have formal verification tools (e.g. TLA+, whose TLC model checker can exhaustively explore the reachable states of a finite model) to check that nothing dangerous happens.
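To make “write down the logic” concrete, here is a toy Python sketch. It is not TLA+; it only mimics the idea of exhaustive state-space checking on a tiny, finite input space. The loan-approval rule, the five income bands, and the safety property are all hypothetical, chosen purely for illustration.

```python
from itertools import product

# Hypothetical hand-written rule: every branch of the logic is visible to a reviewer.
def approve_loan(income_band: int, has_default: bool) -> bool:
    """Approve when income is in band 2 or higher and there is no prior default."""
    return income_band >= 2 and not has_default

# Assumption: income is bucketed into five bands, so the whole input space is finite.
INCOME_BANDS = range(5)
ALL_STATES = list(product(INCOME_BANDS, (False, True)))

# Hypothetical safety property: never approve an applicant with a prior default.
# Because the space is small, we can check every state rather than a sample.
violations = [
    (band, has_default)
    for band, has_default in ALL_STATES
    if has_default and approve_loan(band, has_default)
]
print(f"checked {len(ALL_STATES)} states, violations: {violations}")
```

Running it prints `checked 10 states, violations: []`. Real model checkers such as TLC work on far larger specifications, but the principle is the same: the logic is explicit, so every case can be examined.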

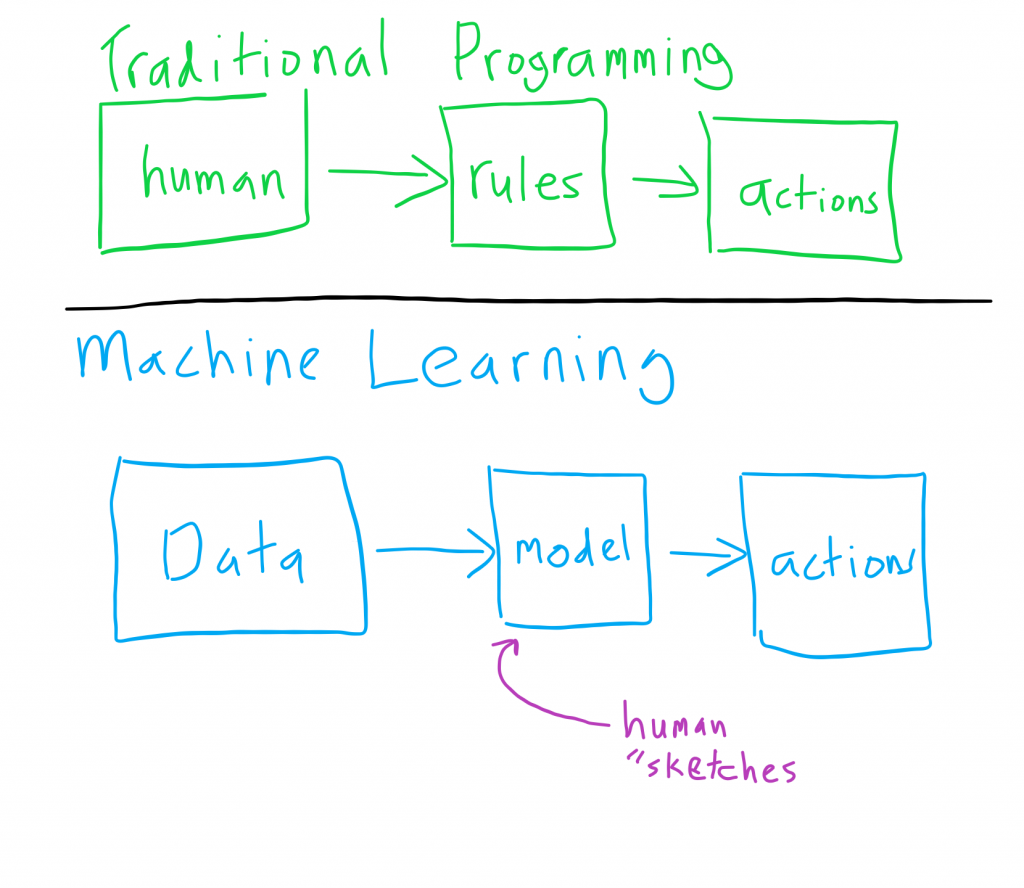

Machine learning is a little different. To re-use a common separation, we have:

In the traditional approach, people build the rules. In the machine learning approach, the rules are learned from data, and – well, we don’t know the rules.
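A toy contrast, again in Python and entirely hypothetical: instead of a person writing `income_band >= 2`, a one-parameter “model” (a decision stump) picks its threshold by minimizing errors on labeled past decisions. The example data and function names are made up; real models have vastly more parameters, which is exactly why their rules are hard to read off.

```python
# Hypothetical labeled examples: (income_band, was_approved) from past decisions.
examples = [(0, False), (1, False), (1, False), (2, True), (3, True), (4, True)]

def learn_threshold(data):
    """Return the threshold t that minimizes errors for the rule `band >= t`."""
    bands = [band for band, _ in data]
    candidates = range(min(bands), max(bands) + 2)

    def errors(t):
        # Count examples where the rule's output disagrees with the label.
        return sum((band >= t) != label for band, label in data)

    return min(candidates, key=errors)

threshold = learn_threshold(examples)

def learned_rule(income_band: int) -> bool:
    # Nobody wrote this threshold down; it came from the data.
    return income_band >= threshold

print(f"learned threshold: {threshold}")
```

Here the learned threshold happens to match the hand-written one, but only because the data says so; change the examples and the rule changes with them, without anyone reviewing it.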

A human may “sketch” the model (design the neural network architecture, specify the functional form of the model), but the ACTIONS the model takes are not reviewed by a human. There is rarely any formal verification or exploration of the full state space of the kind described above.

This is new – when we allow machine learning algorithms to make significant decisions, we should be aware that they might not reflect human judgment.

This is especially true for marginalized groups and rare circumstances. Uncommon situations are poorly represented in the training data, and since machine learning models tend to learn average behavior, that can be deeply problematic. Olivia Guest offered a beautiful example in response to this kerfuffle: when a machine learning system was asked to “reconstruct” a blurred image, it made black women look white, and in one case changed the person’s gender.
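A minimal sketch of why “learning the average” hurts rare groups, under loudly stated assumptions: the data are made up (95 majority examples and 5 minority examples, each with a single target value), and the “model” is just the constant that minimizes mean squared error, which is the overall mean. This is a toy, not the image-reconstruction system in Olivia Guest’s example.

```python
from statistics import mean

# Made-up data: 95 examples from a majority group, 5 from a minority group,
# each labeled with the target value the model should output for that group.
data = [("majority", 0.8)] * 95 + [("minority", 0.2)] * 5

# The constant prediction that minimizes mean squared error is the overall mean,
# so it is dominated by whichever group supplies most of the data.
prediction = mean(target for _, target in data)

def group_error(group: str) -> float:
    """Mean absolute error of the constant prediction on one group."""
    return mean(abs(prediction - target) for g, target in data if g == group)

print(f"prediction: {prediction:.2f}")
print(f"majority error: {group_error('majority'):.2f}")
print(f"minority error: {group_error('minority'):.2f}")
```

The prediction lands near the majority’s value (0.77), so the majority error is small while the minority group’s error is roughly 19 times larger. An aggregate metric would hide exactly the cases that matter here.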

This is shocking! We should not accept systems that behave this way, and deploying them as-is is NOT OKAY.

We need to do better

If machine learning is going to have as big an impact as I would like it to have, we need to do better. I like Ben Recht’s take on control systems (https://www.argmin.net/2020/06/29/tour-revisited/), especially the kind of safety guarantees you need in fields like aerospace engineering.

The bottom line is that machine learning has fantastic potential but is not quite reliable.

We can compare this to aerospace: the first “autopilot” system came out in 1912, 8 years after the first plane. But it wasn’t until the mid-1960s that autopilot systems were really reliable, and until then they were not widely used. Now it’s impossible to imagine flying a plane (or a spacecraft…) without them.

All that said, ethical issues are core to making machine learning scalable, as in usable for the society we live in. We need to build systems that work, and that means they need to be safe and reliable. We cannot ignore obstacles to safety and reliability like dataset bias. Nor can (most of) these problems be shunted off to “model post-processing”: addressing them is a core part of the system.
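One way to treat dataset bias as part of the system rather than an afterthought, sketched under assumptions: make per-group performance a release criterion, so a model that looks accurate on average cannot ship while failing a smaller group. The function names, group labels, evaluation records, and the 0.9 threshold are all hypothetical, and a gate like this only detects a problem; it does not fix one.

```python
from collections import defaultdict

def per_group_accuracy(records):
    """records: iterable of (group, predicted_label, true_label) tuples."""
    hits, totals = defaultdict(int), defaultdict(int)
    for group, predicted, actual in records:
        totals[group] += 1
        hits[group] += int(predicted == actual)
    return {group: hits[group] / totals[group] for group in totals}

def ready_to_deploy(records, min_group_accuracy=0.9):
    """Hypothetical release gate: every group must clear the bar, not just the average."""
    scores = per_group_accuracy(records)
    failing = {g: acc for g, acc in scores.items() if acc < min_group_accuracy}
    return not failing, failing

# Made-up evaluation results: high overall accuracy hides a failing minority group.
evaluation = (
    [("group_a", 1, 1)] * 95 + [("group_a", 0, 1)] * 1
    + [("group_b", 1, 1)] * 2 + [("group_b", 0, 1)] * 2
)

overall = sum(predicted == actual for _, predicted, actual in evaluation) / len(evaluation)
ok, failing = ready_to_deploy(evaluation)
print(f"overall accuracy: {overall:.2f}, deploy: {ok}, failing groups: {failing}")
```

With these made-up numbers the model is 97% accurate overall, yet the gate blocks it because `group_b` sits at 50%.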

Post Revisions:

- December 10, 2020 @ 20:05:00 [Current Revision] by Michael Griffiths

- December 10, 2020 @ 20:04:57 by Michael Griffiths

- December 10, 2020 @ 20:03:48 by Michael Griffiths

- December 10, 2020 @ 20:02:39 by Michael Griffiths
