Recently, Google fired leading ethical AI researcher Timnit Gebru. Timnit was a manager at Google’s Ethical AI Research division; she had written a scathing email to an internal listserv (Google Brain Women and Allies) and sent an ultimatum to her grandboss (boss’s boss) Megan Kacholia. Megan (as far as I can tell) reports to Jeff Dean, head of research.

Timnit was fired for behavior “inconsistent with the

expectations of a Google manager.”

Let’s talk about that, as well as about Jeff Dean’s public

responses.

What’s Happened

Timnit, one of the brightest voices in the Ethical AI field,

joined Google Research. Google hired her as a manager; we can speculate that because

Timnit has such a towering reputation in the field, Google wanted her to build

out a team and hire the best people in the field.

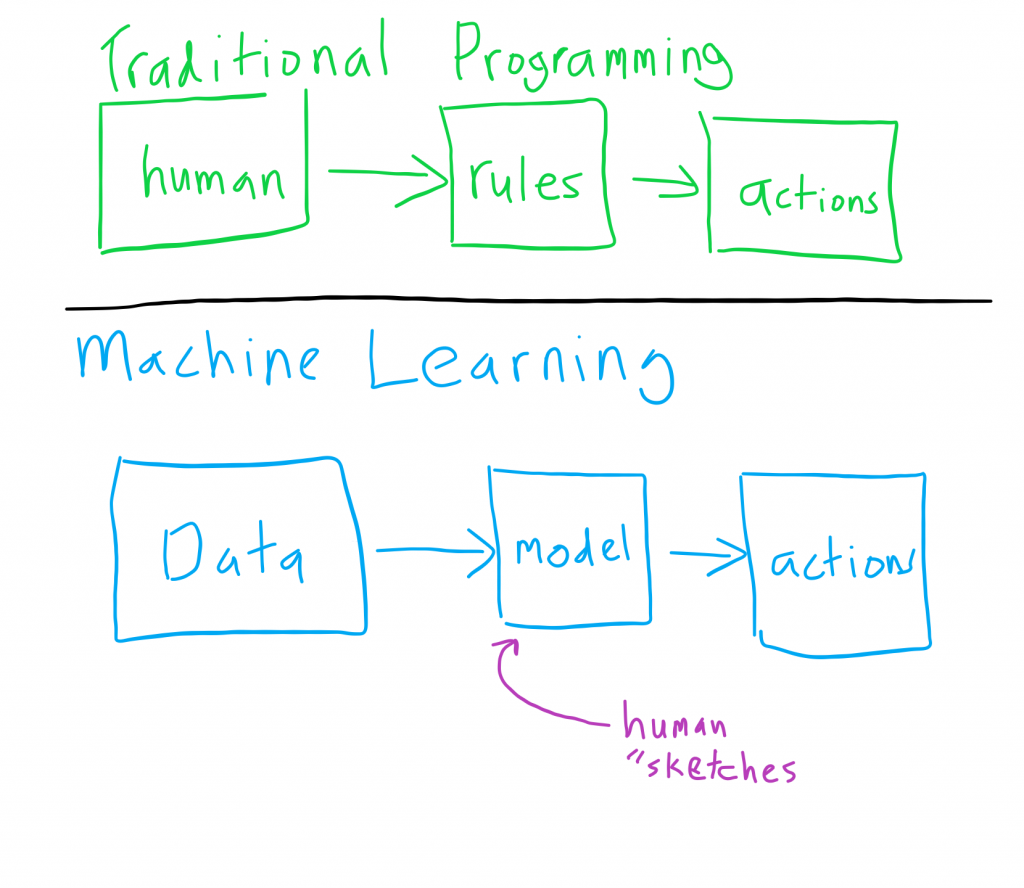

Google’s behavior reflects an enlightened self-interest. It is surprisingly easy to build machine learning models that are not ethical – reinforcing systemic biases – even for experts in machine learning. This is not a new problem (advertising has known for decades of the dangers of, e.g., including zip code in regression models, due to redlining), but as models get more complex, harder to debug, and more widely used, having models that are “ethical” is fantastically important. That’s both for an ethical reason and also for a liability reason – it is not difficult to believe that, quite soon, different regulatory environments across the world will start taking a skeptical look at these (extremely profitable) models.

Once inside, as a manager, Timnit had two responsibilities:

first, to do excellent research (the same as being on the outside) and

second, to work in Google’s interest as a representative of the company.

This all came to a head when:

- Her management (this appears to be Megan, her grandboss) blocked a paper from being submitted. Despite what Jeff Dean says, this kind of thing has been rare, and would have been extremely surprising to everyone involved.

- Timnit got really pissed off – and quite rightfully so. Blocking this kind of submission threatens Job Number 1: doing the excellent research that Google hired her for.

- Timnit escalated all the way: in order to indicate how important this was to her management (we can assume they weren’t understanding why it was a big deal) she threatened to resign. This is usually a signaling thing, i.e. I can no longer do my job effectively if this stands.

- Timnit vented to an “ally” group, griped that she didn’t seem to have the influence to do Job Number 2: work in Google’s interests (as defined by getting Google to adopt ethical/diversity goals), and floated the idea of external pressure.

- Google (Megan) fired her.

The paper under discussion has leaked and the abstract is here. It appears to be normal, even boring, but provides some additional insight. Basically, the paper points out that modern ML systems have a non-trivial environmental impact (this is known) and that ingesting random web data can encode unknown bias into the model (this is known). More research on this is good and expected.

It also suggests something about what happened

internally, e.g. why the paper was blocked. Let’s speculate that everyone was

operating in good faith.

- Legal and PR review the paper and find that it

points out specific flaws in models that Google is running.

- PR has nightmares of headlines after it comes

out – “Google Research says its own AI models have problematic bias,

environmental impact” and says “Absolutely not!”

- They inform Megan, who says something like “OK, good

to call out that risk, let’s make sure we add things to the paper about how

Google is ahead of these problems – we’ll miss this deadline, but OK” (Jeff

Dean cites this in his

public response)

- Timnit is furious (as above; plus, specific

conferences only happen once a year) and things go sideways.

Why did Megan fire Timnit?

Timnit broke two cardinal rules – rules of managerial ethics:

- Don’t increase the risk the company faces: By threatening external pressure (legal action) and promoting the idea to other employees, Timnit is (by one interpretation) operating against the best interests of Google.

- Don’t mark yourself as outside the team: By threatening to resign, Timnit put herself on the other side of Google – i.e. less interested in working to make Google successful, and more interested in her own agenda. It is unacceptable to have a manager who does not subordinate their own interests to the company’s.

It’s easy (as a manager) to look at the email sent and say “Yep, that’s a fireable offense. Completely unacceptable for an authority figure to say those things, and deeply unethical to promote anti-Google actions.”

Should Megan have fired Timnit?

My personal read is that while firing Timnit is

understandable, it was a mistake. It’s a sub-optimal management decision,

and feels more like a knee-jerk reaction to someone not accepting Megan’s power

and authority and daring to challenge her. People who have power sometimes get

a little too used to that power.

What should have happened?

- PR had valid concerns about the headlines, but the decision to block the paper from being submitted – especially since papers could be edited after submission – was bad. PR should not get to block scientific research: it violates a separation of concerns, and diminishes the value of the Research department (other researchers like your research less if they know PR and Legal have first say).

- PR should have waited for the paper to be accepted, and then actively reached out to reporters to cover the paper – promote it! – and then pointed to additional research and work that Google has been doing to mitigate all of this. PR’s job is to manage the flow of information from Google to the public – it should have managed that.

- Megan should have made that happen. Allowing PR/Legal to block the paper is a bad decision for someone to whom the Research department reports.

- Timnit signaled that this was very important: Megan should have taken that as a signal for how extreme a step blocking the paper is, and not as a threat to her own power (or to Google).

- By escalating this, ironically, it is easy to argue that Timnit was operating in Google’s best interests. If people know that PR/Legal can block your papers (especially ethical AI papers!) the value of the Research you do degrades. That is, the research team turns into an expensive extension of the PR department.

- Megan should have consulted Timnit’s manager. Remember, Megan is Timnit’s boss’s boss. The firing should at least have come from Timnit’s manager, and it’s possible Timnit’s manager could have de-escalated more easily.

- Timnit should not have sent that email to the internal Women and Allies listserv; however, absent this other stuff, that doesn’t quite seem fireable. It’s more an opportunity for internal coaching and training – why you shouldn’t do that, how to channel that frustration, and how to be more effective internally. Sending such an email to an “ally” group is much more acceptable than sending it to, say, your direct reports or your broader team. In the first case, you are speaking in a “safe space”; in the other, you are exerting your role as a manager and infusing communication with corporate authority.

Finally, firing Timnit raised the stakes and enabled a much larger public blowback than allowing the paper to be published would have. To put it another way, the paper represented a small PR threat: firing Timnit was a much larger PR threat. I would call that “a mistake.”

Management holds all the power here: continuing to escalate as a manager is not a good look. Part of management’s job is de-escalation.

Jeff Dean inherits the mess

It’s quite likely that Jeff Dean knew none of this until after Timnit was fired and the public backlash had begun.

He now has multiple problems:

- It’s really, really important that researchers

still at Google don’t take this the wrong way – that Google is not the place to

do research. Google wants to hire the best people, and do the best research. It

can’t do that if this has a chilling effect on the work people are doing.

- It’s really important that other people don’t

believe that PR and Legal can block Google’s research papers, because that

would undermine the value of the enterprise.

- Jeff Dean wants to protect the team he has

– which includes Megan. I don’t know for sure, but we might believe that Megan

has worked for Jeff for a long time and he values her tremendously. Even if

this was a mistake, everyone makes mistakes.

Jeff Dean’s public

response tries to thread that needle without admitting wrongdoing.

Admission of wrongdoing would be easy, but it might not protect his team.

So he tries to reframe the review process; reframe why the paper was blocked;

and put it in context of the wide work that the entire team is doing. These are

all good things to do.

Good for Jeff Dean: as expected, he’s doing a great

job.

Where do people go from here?

I’m not really sure. I hope Timnit continues to do great

work, and I hope she can find a way to be more effective.

My own personal view is that there needs to be a really big shift, something similar to the shift that Control Systems research went through in the 1960s – 1980s around safety. Right now people (engineers, product managers, etc.) tend to look at “incidence” (oh this only happens 3% of the time, NOT A BIG DEAL); rarely at conditional incidence (this happens 90% of the time for this subpopulation, which is 3.5% of the total), and almost never safety (this should NEVER happen).
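To make the difference between those three views concrete, here is a minimal sketch in Python. The data is entirely made up for illustration: the group labels "A" and "B", the 10,000 decisions, and the function names are my own assumptions, chosen so that the numbers roughly match the 3% / 90% / 3.5% figures above.

```python
from collections import defaultdict

def incidence(records):
    """Overall failure rate: share of all decisions that went wrong."""
    return sum(failed for _, failed in records) / len(records)

def conditional_incidence(records):
    """Failure rate computed separately for each group."""
    totals = defaultdict(int)
    failures = defaultdict(int)
    for group, failed in records:
        totals[group] += 1
        failures[group] += failed  # True counts as 1, False as 0
    return {group: failures[group] / totals[group] for group in totals}

def violates_safety(records):
    """Safety view: a single failure is already a violation."""
    return any(failed for _, failed in records)

# Hypothetical data: 10,000 decisions. Group "B" is 3.5% of the total
# (350 decisions), and the model fails on 315 of them. It never fails on "A".
records = (
    [("A", False)] * 9650
    + [("B", True)] * 315
    + [("B", False)] * 35
)

overall = incidence(records)               # about 3% overall
by_group = conditional_incidence(records)  # 0% for "A", 90% for "B"
unsafe = violates_safety(records)          # True: it happened at least once
```

The same set of failures looks like a rounding error in the first number, a disaster for one group in the second, and a plain violation in the third. Which of the three you report decides whether anyone thinks there is a problem.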

I’m a big proponent of AI/ML – I think that automatic decision

systems are as transformative to society as the invention of things like writing,

the printing press, and engines. Figuring out how to do this well is hard, and

we need people to tackle these hard problems. Timnit has been tackling some of

them: work like that needs to continue.

Appendix: A Cry for Help

As a minor note, the email that Timnit did send to the Women and Allies listserv sounds a bit like a cry for help. She points out that she’s found it hard to be effective at Job Number 2: work in Google’s best interests. Let’s look at this one line:

I understand that the only things that mean anything at Google are levels, I’ve seen how my expertise has been completely dismissed.

That implies that when Timnit took research or

questions to other parts of the organization (i.e. talking to Directors to

enable Google to tackle ethical/diversity problems before) she was dismissed because

she was “only” a manager. This is despite her comparative expertise: she

had expertise they did not.

The other complaints (difficulty hiring women; ignoring opinions from marginalized groups; inability to get Google management to care about these issues) all suggest a role mismatch: Google was asking her to leverage her expertise (world-leading ethical AI research) but had not given her the organizational remit/power to do that work. Obviously, this is a hard needle to thread: the right answer is probably some kind of change management.

Some obvious ideas:

- Change through organizational hierarchy: If researchers cannot effectively drive change on implementation/engineering teams, then create a mechanism where research highlights issues, and then push those up-and-down (up through Research management, down through Eng).

- Change through process: If other teams (e.g. Eng) ignore researchers that don’t have the right title (e.g. “Director” or “VP”) then give researchers something like a veto power. That’s the nuclear option – an easier thing would be to just allow research some amount of time (3 months?) to be able to hold things “under review.” Effective teams would hate that and probably work with researchers in advance to prevent that from happening.

- Change through incentives: Change the promotion system / bonus review system to include “reviews” of the work that engineering teams have done; doing something that ignores research could then “neuter” the value of the work. People not getting promoted changes incentive systems very quickly indeed.

- Senior-ize some researchers: Just give some researchers (or ethical AI people specifically) “undeserved” organizational power.

These solutions all assume that Google really cares about having its deployments on the cutting edge, or at least not doing obviously flawed things. That’s not guaranteed! They could, for instance, be more interested in making more money, protecting their monopoly, defending against regulatory options, etc.

Appendix: A Lesson for PR Departments

It sounds like the Legal/PR review used here is basically identical to the review process used for general publications that employees write, e.g. if an employee is writing a piece to appear in a magazine, or is working on some document they want to publish.

In those cases, there is often no real external deadline; or PR might be in control (wanting to “time” the publication). Plus, the work being done is done for the sake of the organization – perhaps a fringe benefit is employee reputation. Moreover, the expected audience is large and general (PR employees know how reporters and the public will respond a lot better than most employees).

These assumptions are not true for research papers. The public does not read research papers: they are read by other experts in the field. Reporters are unlikely to read the paper – especially if you give them a nice 3-paragraph explanation.

That means that editing or blocking the paper doesn’t really serve the same purpose as “normal” review. Rather, rapid response in parallel with paper submission would be more effective.

The exception is work that directly damages the company, e.g. estimating some negative effects that current company policies have. Having that in a journal article or conference paper would make mitigation (in court or the news) nearly impossible. That implies that blocking or delaying should have a much higher bar for research papers than for other public communications.